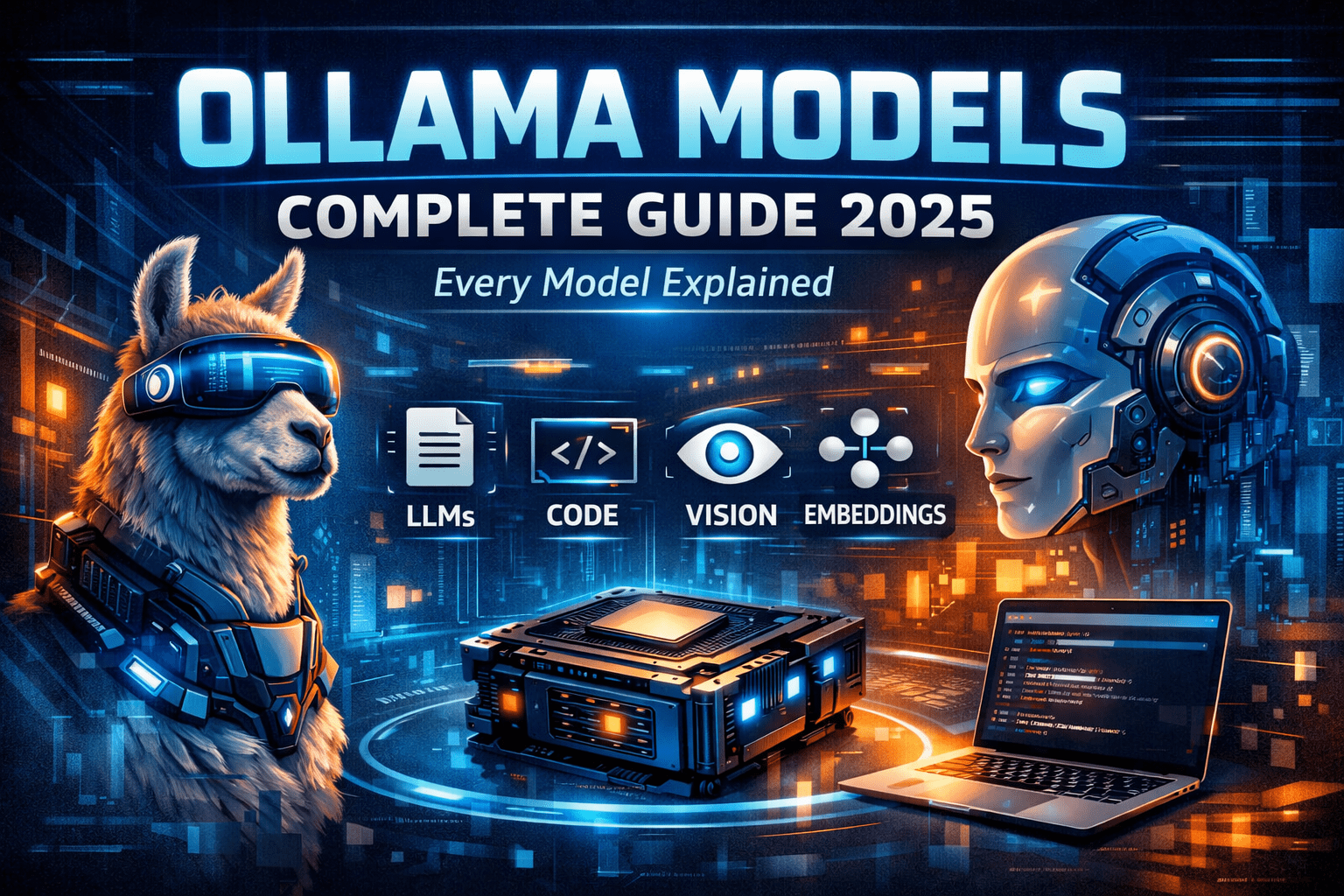

Ollama Models: The Complete Guide (2025 Edition)

Local large language models (LLMs) are evolving rapidly, and Ollama has become one of the most widely used tools for running AI models locally. Whether you are a developer, researcher, or AI enthusiast, Ollama lets you run state-of-the-art open models directly on your own hardware, without relying on cloud APIs.

This article gives a detailed overview of the most notable Ollama-supported models in 2025, grouped into general-purpose LLMs, reasoning models, coding models, vision models, embedding models, and lightweight edge models.

Reference & inspiration:

PracticalWebTools – Ollama Models Complete Guide 2025

Official Ollama Model Library

What Is Ollama?

Ollama is a local AI runtime that simplifies downloading, running, and managing large language models on macOS, Linux, and Windows.

- Runs fully offline once models are downloaded

- CPU and GPU support

- Simple CLI and REST API

- Model versioning through tags (for example, `llama3:70b`)

- Privacy-first execution: prompts and data stay on your machine
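Most integrations talk to Ollama through its REST API. The sketch below sends one prompt to the `/api/generate` endpoint using only Python's standard library. It assumes the server is running on its default address (`http://localhost:11434`) and that the model named in the call has already been pulled (for example with `ollama pull llama3`); the helper names are illustrative, not part of Ollama.

```python
import json
import urllib.request

# Assumption: Ollama is listening on its default local address.
OLLAMA_URL = "http://localhost:11434"


def build_generate_payload(model: str, prompt: str) -> dict:
    """Request body for POST /api/generate; stream=False asks for a single JSON reply."""
    return {"model": model, "prompt": prompt, "stream": False}


def generate(model: str, prompt: str, base_url: str = OLLAMA_URL) -> str:
    """Send one prompt to a running Ollama server and return the generated text."""
    body = json.dumps(build_generate_payload(model, prompt)).encode("utf-8")
    request = urllib.request.Request(
        f"{base_url}/api/generate",
        data=body,
        headers={"Content-Type": "application/json"},
    )
    with urllib.request.urlopen(request) as response:
        # The non-streaming reply carries the full completion in "response".
        return json.loads(response.read())["response"]
```

With the server running, `generate("llama3", "Explain a context window in one sentence.")` returns the model's text. The CLI equivalent for interactive use is `ollama run llama3`.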

Ollama Model Categories

- General-purpose language models

- Reasoning and math-focused models

- Code-specialized models

- Vision and multimodal models

- Embedding models

- Lightweight and edge-optimized models

General-Purpose Language Models

LLaMA 2

Developer: Meta

Parameters: 7B, 13B, 70B

Context Length: 4K tokens

LLaMA 2 is one of the most popular open-weight language models and offers strong performance for general reasoning, conversation, and content generation.

Best for:

- Chatbots

- Content creation

- General reasoning tasks

Ollama: LLaMA 2

Meta AI – LLaMA

LLaMA 3

Developer: Meta

Parameters: 8B, 70B

Context Length: Up to 8K tokens

LLaMA 3 delivers major improvements in reasoning, instruction following, and output quality, making it one of the best all-around models available in Ollama.

Best for:

- Production chat systems

- Long-form content generation

- High-quality reasoning
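For chat systems, Ollama's `/api/chat` endpoint takes a list of role-tagged messages and does not remember earlier turns on its own, so the client must resend the history each time. A minimal sketch of that bookkeeping, assuming a local server on the default port and the `llama3` tag; the `ChatSession` class is hypothetical, not an Ollama API:

```python
import json
import urllib.request
from typing import Dict, List, Optional


class ChatSession:
    """Keeps the message history that /api/chat expects on every request."""

    def __init__(self, model: str, system: Optional[str] = None,
                 base_url: str = "http://localhost:11434") -> None:
        self.model = model
        self.base_url = base_url
        self.messages: List[Dict[str, str]] = []
        if system:
            self.messages.append({"role": "system", "content": system})

    def payload(self) -> dict:
        # The whole history is resent with each request.
        return {"model": self.model, "messages": list(self.messages), "stream": False}

    def ask(self, text: str) -> str:
        """Add a user turn, call the server, and record the assistant's reply."""
        self.messages.append({"role": "user", "content": text})
        request = urllib.request.Request(
            f"{self.base_url}/api/chat",
            data=json.dumps(self.payload()).encode("utf-8"),
            headers={"Content-Type": "application/json"},
        )
        with urllib.request.urlopen(request) as response:
            reply = json.loads(response.read())["message"]
        self.messages.append({"role": reply["role"], "content": reply["content"]})
        return reply["content"]
```

Because the full history grows with every turn, long conversations eventually hit the model's context limit (8K tokens for LLaMA 3), and production systems typically trim or summarize older turns.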

Mistral

Developer: Mistral AI

Parameters: 7B

Context Length: 8K tokens (v0.1); 32K tokens from v0.2 onward

Mistral is designed for speed and efficiency while maintaining excellent reasoning capabilities, making it ideal for local and low-latency use cases.

Best for:

- Fast inference

- Lower-memory systems

- Real-time applications
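For real-time applications, what matters most is showing text as soon as it is generated. By default, `/api/generate` streams newline-delimited JSON objects, each carrying a `response` fragment, until one arrives with `"done": true`. A sketch that consumes that stream, assuming a local server and the `mistral` tag; the function names are illustrative:

```python
import json
import urllib.request
from typing import Iterable, Iterator


def iter_stream_text(lines: Iterable[bytes]) -> Iterator[str]:
    """Yield text pieces from Ollama's newline-delimited JSON stream."""
    for raw in lines:
        if not raw.strip():
            continue  # skip empty lines defensively
        chunk = json.loads(raw)
        piece = chunk.get("response", "")
        if piece:
            yield piece
        if chunk.get("done"):
            break  # stop at the object marked done


def stream_generate(model: str, prompt: str,
                    base_url: str = "http://localhost:11434") -> Iterator[str]:
    """Call /api/generate with streaming left on (the default) and yield text as it arrives."""
    request = urllib.request.Request(
        f"{base_url}/api/generate",
        data=json.dumps({"model": model, "prompt": prompt}).encode("utf-8"),
        headers={"Content-Type": "application/json"},
    )
    with urllib.request.urlopen(request) as response:
        # The HTTP response object iterates line by line.
        yield from iter_stream_text(response)
```

Printing each piece with `print(piece, end="", flush=True)` inside `for piece in stream_generate("mistral", "...")` gives the familiar typewriter effect. Keeping the parser separate from the network call also makes it easy to test.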

Mixtral

Developer: Mistral AI

Architecture: Mixture of Experts (MoE)

Parameters: 8x7B, 8x22B

Context Length: 32K tokens (8x7B), 64K tokens (8x22B)

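Mixtral is a sparse mixture-of-experts model: in each layer, a router sends every token to 2 of 8 expert feed-forward networks. Mixtral 8x7B therefore has about 47B parameters in total but uses only about 13B per token, giving strong reasoning at a lower compute cost than a dense model of similar total size.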

Best for:

- Advanced reasoning

- Long-context conversations

- Enterprise-grade workloads
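The routing idea is easier to see in code. This toy sketch shows top-k gating on scalars: pick the highest-scoring experts, softmax their scores into weights, and combine only those experts' outputs. It is a simplification: in Mixtral the experts are feed-forward blocks over vectors and the router logits come from a learned linear layer, both of which are replaced here by plain functions and given numbers.

```python
import math
from typing import Callable, List, Sequence, Tuple


def softmax(values: Sequence[float]) -> List[float]:
    peak = max(values)  # subtract the max for numerical stability
    exps = [math.exp(v - peak) for v in values]
    total = sum(exps)
    return [e / total for e in exps]


def top_k_route(router_logits: Sequence[float], k: int = 2) -> List[Tuple[int, float]]:
    """Choose the k highest-scoring experts and renormalize their gate weights."""
    chosen = sorted(range(len(router_logits)),
                    key=lambda i: router_logits[i], reverse=True)[:k]
    weights = softmax([router_logits[i] for i in chosen])
    return list(zip(chosen, weights))


def moe_forward(x: float, experts: Sequence[Callable[[float], float]],
                router_logits: Sequence[float], k: int = 2) -> float:
    """Weighted sum of the selected experts' outputs; unselected experts never run."""
    return sum(weight * experts[i](x) for i, weight in top_k_route(router_logits, k))
```

With 8 experts and `k=2`, only two expert functions execute per input, which is where the compute savings come from. All 8 still have to be loaded into memory, so Mixtral's memory footprint follows its total parameter count, not its active one.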

Reasoning and Math-Focused Models

DeepSeek-R1

Developer: DeepSeek

Parameters: 7B, 32B, 70B (distilled versions; other sizes, up to the full 671B model, are also available)

DeepSeek-R1 specializes in logical reasoning, mathematical problem solving, and structured analytical tasks.

Best for:

- Math reasoning

- Logical analysis

- Scientific workflows
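DeepSeek-R1 typically writes its intermediate reasoning between `<think>` and `</think>` tags before giving the final answer. Applications often show only the answer and log the reasoning separately. A minimal sketch of that split, assuming the tags appear as plain text in the response (newer Ollama versions may also be able to return the reasoning separately); `split_reasoning` is a hypothetical helper:

```python
import re
from typing import Tuple

# Matches the first <think>...</think> block, including newlines inside it.
THINK_BLOCK = re.compile(r"<think>(.*?)</think>", re.DOTALL)


def split_reasoning(text: str) -> Tuple[str, str]:
    """Return (reasoning, answer) from a raw DeepSeek-R1 style completion."""
    match = THINK_BLOCK.search(text)
    if match is None:
        return "", text.strip()
    reasoning = match.group(1).strip()
    answer = (text[:match.start()] + text[match.end():]).strip()
    return reasoning, answer
```

For math and logic tasks, keeping the reasoning is useful for auditing how the model reached its result. Only the answer needs to be shown to end users.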

Qwen

Developer: Alibaba

Parameters: 7B, 14B, 72B

Context Length: Up to 32K tokens

Qwen models offer strong multilingual support and excellent reasoning for technical and academic domains.

Best for:

- Multilingual applications

- Research tasks

- Structured output generation
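For structured output, `/api/generate` accepts `"format": "json"`, which constrains the reply to valid JSON. The prompt should still tell the model which fields to produce. A sketch under those assumptions, using `qwen2.5` as an example tag; the validation helper is illustrative:

```python
import json
from typing import Iterable


def build_json_request(model: str, prompt: str) -> dict:
    """Ask /api/generate to constrain the reply to valid JSON."""
    return {"model": model, "prompt": prompt, "format": "json", "stream": False}


def parse_json_reply(response_text: str, required_keys: Iterable[str]) -> dict:
    """Decode the model's JSON and check that the expected fields are present."""
    data = json.loads(response_text)
    if not isinstance(data, dict):
        raise ValueError("expected a JSON object")
    missing = [key for key in required_keys if key not in data]
    if missing:
        raise ValueError(f"reply is missing keys: {missing}")
    return data
```

JSON mode guarantees syntax, not schema, so the key check catches replies that are valid JSON but lack the fields your application needs.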

Code-Specialized Models

Code LLaMA

Developer: Meta

Parameters: 7B, 13B, 34B

Code LLaMA is optimized for programming-related tasks including generation, refactoring, and debugging.

Best for:

- Code generation

- Debugging

- Refactoring
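Instruction-tuned code models usually wrap code in Markdown fences inside an otherwise conversational reply. When piping output into an editor or test runner, it helps to extract only the fenced code. This is a generic sketch, not an Ollama feature, and it assumes the model uses standard triple-backtick fences:

```python
import re
from typing import List

FENCE = "`" * 3  # avoids a literal triple backtick inside this Markdown example

# A fence, an optional language tag, then everything up to the closing fence.
CODE_BLOCK = re.compile(FENCE + r"[\w+#.-]*[ \t]*\n(.*?)" + FENCE, re.DOTALL)


def extract_code_blocks(reply: str) -> List[str]:
    """Return the contents of every fenced code block in a model reply."""
    return [block.rstrip("\n") for block in CODE_BLOCK.findall(reply)]
```

Replies with no fences return an empty list. Callers can then decide whether to fall back to the raw text.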

DeepSeek-Coder

Developer: DeepSeek

Parameters: 6.7B, 33B

Context Length: Up to 16K tokens

DeepSeek-Coder was trained mostly on source code, and its 16K context window lets it work accurately across larger files and multi-file context.
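Ollama's default context size has historically been smaller than 16K, so using DeepSeek-Coder's full window means setting the `num_ctx` option per request. The sketch below does that and adds a pre-flight size check. The 4-characters-per-token estimate is a rough assumption, not the model's tokenizer, and `build_code_request` is a hypothetical helper:

```python
import math


def estimate_tokens(text: str, chars_per_token: float = 4.0) -> int:
    """Very rough token estimate; real counts depend on the model's tokenizer."""
    return math.ceil(len(text) / chars_per_token)


def build_code_request(model: str, source: str, question: str,
                       num_ctx: int = 16384, reserve_for_output: int = 1024) -> dict:
    """Build a /api/generate body that raises the context window via options.num_ctx."""
    prompt = f"{question}\n\n{source}"
    needed = estimate_tokens(prompt) + reserve_for_output
    if needed > num_ctx:
        raise ValueError(f"about {needed} tokens needed but num_ctx is {num_ctx}; split the input")
    return {
        "model": model,
        "prompt": prompt,
        "stream": False,
        "options": {"num_ctx": num_ctx},
    }
```

A larger `num_ctx` also increases memory use, because the KV cache grows with the context window. For that reason, the window is usually raised only for requests that need it.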

WizardCoder

Developer: WizardLM

Parameters: 15B, 34B

WizardCoder excels at structured explanations and instruction-based coding tasks.

Vision and Multimodal Models

LLaVA

Parameters: 7B, 13B

LLaVA connects a CLIP vision encoder to a language model, so it can describe images and answer questions about them.
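Multimodal models in Ollama receive images as base64-encoded strings in an `images` list sent alongside the prompt. A sketch of building that request body, assuming the `llava` tag; the helper names are illustrative:

```python
import base64
from pathlib import Path
from typing import Union


def encode_image(image: Union[bytes, str, Path]) -> str:
    """Base64-encode raw bytes, or the contents of an image file given by path."""
    data = image if isinstance(image, bytes) else Path(image).read_bytes()
    return base64.b64encode(data).decode("ascii")


def build_vision_request(model: str, prompt: str, image: Union[bytes, str, Path]) -> dict:
    """Body for POST /api/generate with one attached image."""
    return {
        "model": model,
        "prompt": prompt,
        "images": [encode_image(image)],
        "stream": False,
    }
```

The body can be posted exactly like a text-only request, for example `build_vision_request("llava", "What is in this picture?", "photo.jpg")`, where `photo.jpg` stands in for any local file.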

BakLLaVA

BakLLaVA applies the LLaVA architecture to a Mistral 7B base model, giving an efficient multimodal model.

Embedding Models

Nomic-Embed-Text

Developer: Nomic AI

Designed for semantic search, vector databases, and RAG pipelines.
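In semantic search and RAG, texts are turned into vectors and compared by cosine similarity. The sketch below fetches embeddings from Ollama's `/api/embed` endpoint and ranks documents against a query. It assumes a local server with `nomic-embed-text` pulled; `embed`, `cosine`, and `rank` are illustrative helpers, and the linear scan stands in for a vector database:

```python
import json
import math
import urllib.request
from typing import List, Sequence, Tuple


def embed(texts: List[str], model: str = "nomic-embed-text",
          base_url: str = "http://localhost:11434") -> List[List[float]]:
    """Fetch one embedding vector per input text from a running Ollama server."""
    request = urllib.request.Request(
        f"{base_url}/api/embed",
        data=json.dumps({"model": model, "input": texts}).encode("utf-8"),
        headers={"Content-Type": "application/json"},
    )
    with urllib.request.urlopen(request) as response:
        return json.loads(response.read())["embeddings"]


def cosine(a: Sequence[float], b: Sequence[float]) -> float:
    """Cosine similarity; returns 0.0 if either vector is all zeros."""
    dot = sum(x * y for x, y in zip(a, b))
    norm = math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b))
    return dot / norm if norm else 0.0


def rank(query: Sequence[float], docs: Sequence[Sequence[float]],
         top_k: int = 3) -> List[Tuple[int, float]]:
    """Return (document index, similarity) pairs, most similar first."""
    scores = [(i, cosine(query, doc)) for i, doc in enumerate(docs)]
    scores.sort(key=lambda pair: pair[1], reverse=True)
    return scores[:top_k]
```

In a RAG pipeline, documents are embedded once and stored. At query time, `rank(embed([question])[0], stored_vectors)` selects the passages to insert into the prompt of a chat model.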

mxbai-embed-large

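Developer: Mixedbread AI

Parameters: 335M

A larger embedding model that produces 1024-dimensional vectors, suited to the same search and RAG workloads.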

Lightweight and Edge Models

Phi-2

Developer: Microsoft

Parameters: 2.7B

TinyLLaMA

Parameters: 1.1B

Hardware Requirements Overview

| Model Size | Recommended Hardware |
|---|---|
| ≤ 7B | 8 GB RAM; runs on CPU alone |
| 13B | 16 GB RAM; a GPU is recommended |
| 33B | 32 GB RAM, or a GPU with 24 GB+ VRAM |
| 70B+ | High-end GPU (A100 / H100 class) |
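These numbers follow from simple arithmetic: the weights alone need roughly parameters × bits per weight ÷ 8 bytes. The sketch assumes 4-bit quantization, which is typical of Ollama's default tags, though exact formats vary by model. The KV cache and runtime buffers add more on top. The 20% overhead factor below is a hypothetical rule of thumb, not a measured value.

```python
def weight_memory_gb(params_billion: float, bits_per_weight: float = 4.0) -> float:
    """GB (10**9 bytes) needed just to hold the weights: params x bits / 8."""
    return params_billion * bits_per_weight / 8


def fits_in_memory(params_billion: float, available_gb: float,
                   bits_per_weight: float = 4.0, overhead: float = 1.2) -> bool:
    """Rough check; the overhead factor stands in for KV cache and runtime buffers."""
    return weight_memory_gb(params_billion, bits_per_weight) * overhead <= available_gb


for size in (7, 13, 33, 70):
    print(f"{size}B @ 4-bit: ~{weight_memory_gb(size):.1f} GB of weights")
```

At 4 bits, a 7B model needs about 3.5 GB for its weights, which fits comfortably in 8 GB of RAM. A 70B model needs about 35 GB, which is why it does not fit on a single 24 GB card.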

Final Thoughts

Ollama's ecosystem in 2025 offers a broad choice of models for running AI locally. From lightweight edge models to large reasoning-focused LLMs, Ollama makes private, offline AI accessible to everyone.

If you are serious about local AI, Ollama is no longer optional — it is essential.