Building an Automated Self-Optimizing RAG System: How I Made AI That Tracks and Fixes Its Own Performance

Introduction

Retrieval-Augmented Generation (RAG) pairs large language models with external knowledge bases, so a model can answer from data it was never trained on. But as the data grows and real-world demands increase, keeping a RAG system at peak performance becomes a serious challenge. In this post, I’ll walk you through how I built an automated RAG system that monitors its own performance, detects degradation, and rebuilds itself without manual intervention.

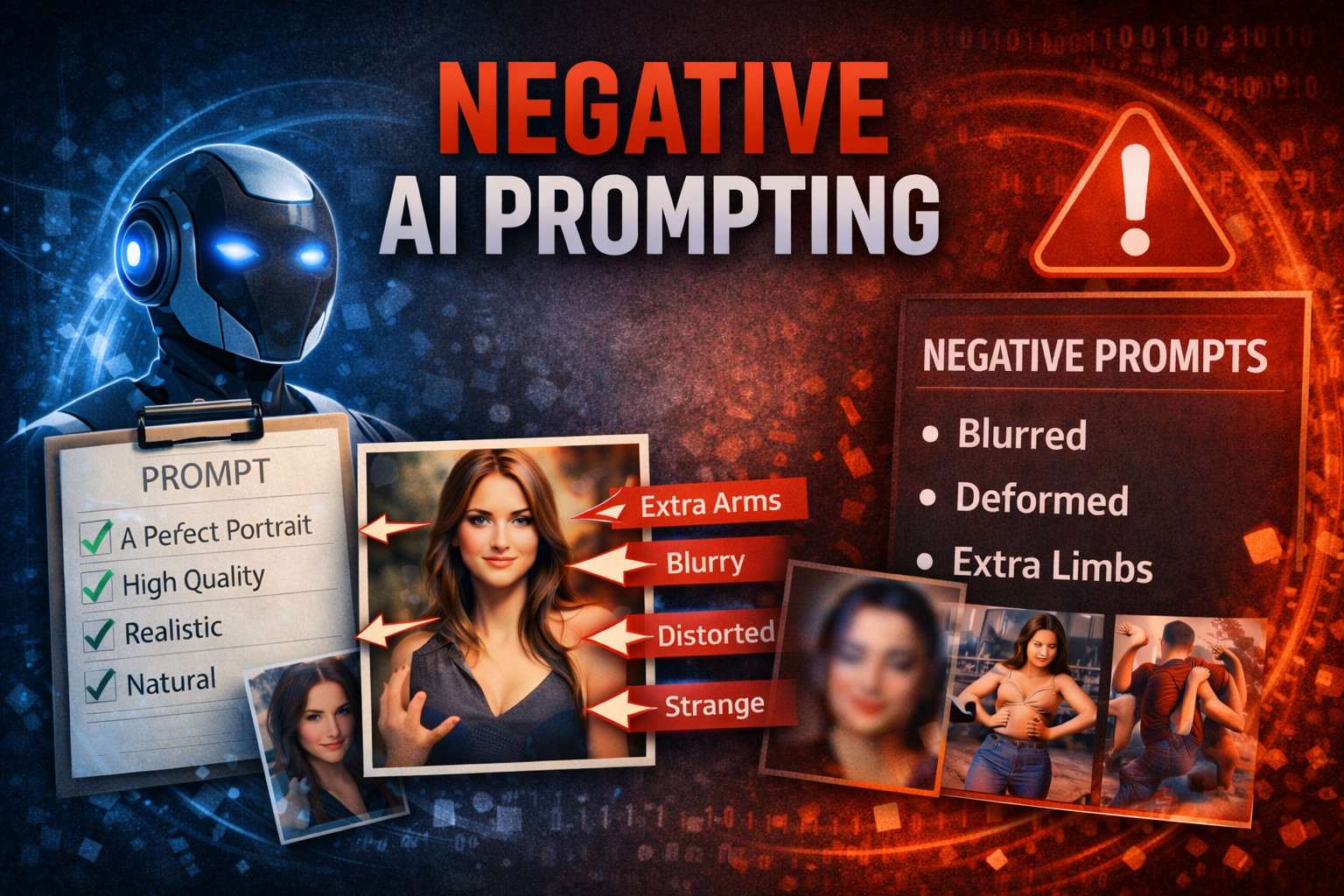

Why Automation in RAG?

Managing multiple RAG systems by hand is tedious and error-prone. Over time, vector stores like Qdrant can accumulate noise, duplicates, and poorly embedded chunks, which slow down similarity searches and reduce answer quality. The traditional fix of exporting the data, re-chunking it, re-embedding it, and importing it again is time-consuming when done manually, and every manual step is another chance for mistakes.
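Exact duplicates are the easiest of these problems to detect. The following is an illustrative Python sketch, not the production PHP code, assuming each chunk has an ID and its text: it hashes the whitespace-normalized, lowercased text and groups IDs that share a digest. The function names are hypothetical, and near-duplicates (paraphrased passages) would need embedding similarity, which this sketch does not attempt.

```python
import hashlib
import re


def normalize(text: str) -> str:
    """Lowercase and collapse whitespace so trivial formatting differences don't hide duplicates."""
    return re.sub(r"\s+", " ", text).strip().lower()


def find_duplicate_chunks(chunks: dict[str, str]) -> dict[str, list[str]]:
    """Group chunk IDs whose normalized text is identical.

    `chunks` maps a chunk ID to its text. Returns {kept_id: [duplicate_ids, ...]}
    for every group with more than one member; the first ID seen is the one kept.
    """
    first_seen: dict[str, str] = {}  # digest -> first chunk ID with that content
    duplicates: dict[str, list[str]] = {}
    for chunk_id, text in chunks.items():
        digest = hashlib.sha256(normalize(text).encode("utf-8")).hexdigest()
        if digest in first_seen:
            duplicates.setdefault(first_seen[digest], []).append(chunk_id)
        else:
            first_seen[digest] = chunk_id
    return duplicates


print(find_duplicate_chunks({"a": "Hello  World", "b": "hello world", "c": "Other text"}))
# {'a': ['b']}
```

The duplicate IDs returned here are the candidates to delete from the vector store before, or instead of, a full rebuild.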

My Approach: A Self-Healing RAG System

I created a system that continuously monitors the performance of my RAG setups. When performance drops below a set threshold, the system automatically triggers a cleanup and rebuild:

* Data Export: Extract the current knowledge base

* Chunking: Re-chunk documents into sizes suited to the embedding model

* Embedding: Re-embed chunks using embedding models served by Ollama or another embedding service

* Re-import: Clear and reload the vector database (e.g., Qdrant) with fresh data
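The re-import step maps onto three Qdrant REST calls: delete the collection, recreate it with the right vector size, and upsert the fresh points in batches. Below is a minimal Python sketch that only builds those calls as `(method, path, body)` tuples, so it can be inspected offline; `rebuild_requests`, `send`, the batch size, and the `localhost:6333` URL are assumptions for illustration, and point IDs are simply the list positions.

```python
import json
import urllib.request

QDRANT_URL = "http://localhost:6333"  # assumption: a local Qdrant on its default REST port


def rebuild_requests(collection, vectors, payloads, distance="Cosine", batch_size=256):
    """Build the REST calls that drop a collection, recreate it, and upsert fresh points.

    Returns a list of (method, path, body) tuples; body is None for the DELETE.
    """
    if len(vectors) != len(payloads):
        raise ValueError("vectors and payloads must have the same length")
    if not vectors:
        raise ValueError("nothing to import")
    size = len(vectors[0])  # every vector must have this dimension
    calls = [
        ("DELETE", f"/collections/{collection}", None),
        ("PUT", f"/collections/{collection}",
         {"vectors": {"size": size, "distance": distance}}),
    ]
    # Upsert in batches so a large knowledge base doesn't become one huge request.
    for start in range(0, len(vectors), batch_size):
        points = [
            {"id": i, "vector": vectors[i], "payload": payloads[i]}
            for i in range(start, min(start + batch_size, len(vectors)))
        ]
        calls.append(("PUT", f"/collections/{collection}/points?wait=true",
                      {"points": points}))
    return calls


def send(method, path, body, base_url=QDRANT_URL):
    """Execute one call against a running Qdrant instance and return the parsed JSON reply."""
    data = None if body is None else json.dumps(body).encode("utf-8")
    req = urllib.request.Request(base_url + path, data=data, method=method,
                                 headers={"Content-Type": "application/json"})
    with urllib.request.urlopen(req) as resp:
        return json.loads(resp.read())
```

Dropping the live collection leaves a short window in which queries find nothing. Qdrant's collection aliases offer a way around that: build into a new collection, then point the alias at it once the import finishes.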

This pipeline runs automatically in the background, ensuring my RAG systems stay fast and reliable.
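The trigger logic can be sketched as a rolling-window monitor that runs the pipeline stages in order once average latency rises above, or average quality falls below, a threshold. This is a hedged Python sketch, not the actual implementation: the post doesn't say how accuracy is measured, so `score` is assumed to be a per-query value in [0, 1] (for example from user feedback or an automated relevance check), and the class name, window size, and thresholds are placeholders.

```python
from collections import deque
from statistics import mean
from typing import Callable, Sequence


class RagMonitor:
    """Rolling-window health check that runs a rebuild pipeline when quality degrades."""

    def __init__(self, steps: Sequence[Callable[[], None]], window: int = 100,
                 max_latency_ms: float = 500.0, min_score: float = 0.7):
        self.steps = steps  # pipeline stages in order: export, chunk, embed, re-import
        self.latencies = deque(maxlen=window)  # oldest values drop out automatically
        self.scores = deque(maxlen=window)
        self.max_latency_ms = max_latency_ms
        self.min_score = min_score
        self.rebuilds = 0

    def record(self, latency_ms: float, score: float) -> bool:
        """Record one query; return True if this observation triggered a rebuild."""
        self.latencies.append(latency_ms)
        self.scores.append(score)
        if len(self.latencies) < self.latencies.maxlen:
            return False  # wait for a full window before judging
        if mean(self.latencies) > self.max_latency_ms or mean(self.scores) < self.min_score:
            for step in self.steps:
                step()
            self.rebuilds += 1
            # Start fresh so the rebuilt index is judged only on new queries.
            self.latencies.clear()
            self.scores.clear()
            return True
        return False


log = []
monitor = RagMonitor(
    steps=[lambda: log.append("export"), lambda: log.append("chunk"),
           lambda: log.append("embed"), lambda: log.append("reimport")],
    window=3, max_latency_ms=200)
for latency in (150, 250, 300):
    triggered = monitor.record(latency, score=0.9)
print(triggered, log)  # True ['export', 'chunk', 'embed', 'reimport']
```

Averaging over a window rather than reacting to a single slow query keeps one outlier from kicking off a full rebuild.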

Technical Architecture

The core components include:

* Multiple Ollama API servers: I run more than 35 Ollama API instances and distribute LLM inference and embedding requests across them.

* PHP backend: Manages data exports, chunking, embedding, and vector store interactions.

* Vector database: Qdrant serves as the vector search backend and handles fast similarity queries.

* Automated monitoring: Tracks query latency and accuracy to trigger rebuilds as needed.
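Spreading embedding work over many Ollama servers can be as simple as rotating through their base URLs. The Python sketch below assumes plain round-robin (the post doesn't say how requests are distributed) and uses Ollama's `/api/embed` endpoint, which takes a `model` and an `input` list and returns an `embeddings` list. The `OllamaPool` class, the server URLs, and the `nomic-embed-text` model name are illustrative assumptions.

```python
import itertools
import json
import urllib.request


class OllamaPool:
    """Round-robin dispatcher over several Ollama servers (hypothetical helper)."""

    def __init__(self, base_urls, model="nomic-embed-text"):
        if not base_urls:
            raise ValueError("need at least one Ollama server")
        self._cycle = itertools.cycle(base_urls)  # endless rotation over the servers
        self.model = model  # assumption: whichever embedding model the servers have pulled

    def build_embed_request(self, texts):
        """Build a POST to /api/embed on the next server in the rotation."""
        body = json.dumps({"model": self.model, "input": list(texts)}).encode("utf-8")
        return urllib.request.Request(
            next(self._cycle) + "/api/embed",
            data=body,
            method="POST",
            headers={"Content-Type": "application/json"},
        )

    def embed(self, texts, timeout=60):
        """Send the request to a live server and return one vector per input text."""
        with urllib.request.urlopen(self.build_embed_request(texts), timeout=timeout) as resp:
            return json.loads(resp.read())["embeddings"]


pool = OllamaPool(["http://ollama-01:11434", "http://ollama-02:11434"])
```

Plain round-robin ignores how busy each server is and does not retry when one is down; with dozens of instances, skipping a server after a failed request would be a natural next step.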

Chunking Challenges

Chunk size is a trade-off: too small, and the model loses context; too large, and retrieval becomes slower and less precise. I designed a flexible PHP chunker to manage that balance; it currently processes roughly 125,000 bytes (~50,000 tokens) of content from 46 WordPress posts.
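A common way to handle this trade-off is a size-capped chunker with overlap. The Python sketch below illustrates that technique; it is not the PHP chunker itself. It measures size in characters as a rough proxy for tokens, prefers to cut at a paragraph break and then at a space, and repeats the last `overlap` characters at the start of the next chunk so context isn't lost at the seams. The function name and default sizes are assumptions.

```python
def chunk_text(text: str, max_chars: int = 2000, overlap: int = 200) -> list[str]:
    """Split text into chunks of at most max_chars, with overlapping boundaries."""
    if not 0 <= overlap < max_chars:
        raise ValueError("overlap must be >= 0 and smaller than max_chars")
    text = text.strip()
    chunks: list[str] = []
    start = 0
    while start < len(text):
        end = min(start + max_chars, len(text))
        if end < len(text):
            # Prefer a paragraph break inside the window, then a space.
            cut = text.rfind("\n\n", start, end)
            if cut <= start + overlap:
                cut = text.rfind(" ", start, end)
            # Only accept a cut that still moves past the overlap region,
            # which guarantees the loop always makes progress.
            if cut > start + overlap:
                end = cut
        chunk = text[start:end].strip()
        if chunk:
            chunks.append(chunk)
        if end >= len(text):
            break
        start = end - overlap  # step back so consecutive chunks share context
    return chunks


print(chunk_text("aaaa bbbb cccc dddd", max_chars=10, overlap=5))
# ['aaaa bbbb', 'bbbb cccc', 'cccc dddd']
```

Because the overlap is counted in characters, a chunk can start mid-word; snapping the start forward to the next space would be a small refinement.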

Why Build My Own System?

Existing platforms didn’t fully meet my needs. I had access to extensive server resources through ispDashboard, which let me experiment and iterate quickly. Building from scratch also lets me customize every step, integrate Ollama tightly, and push further with automation and self-optimization.

What’s Next?

I’m continuously improving the system — adding more advanced monitoring, richer user interfaces, and even integrating screenshot-based diagnostics to collaborate better with AI assistants.